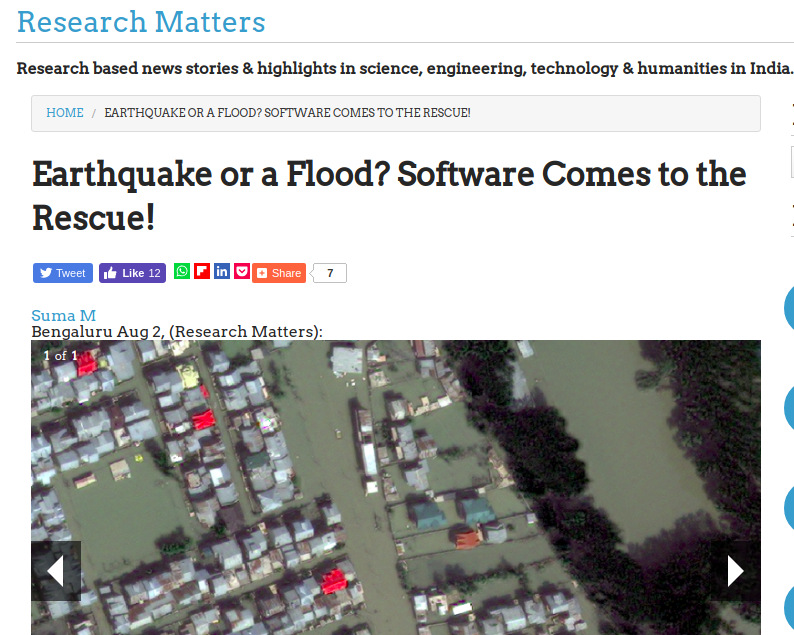

Satellite images are a rich source of data and can play a significant role in planning and mitigation activities during a disaster. The images contain low-level features such as colour, texture and shape, which the system can process but which carry little meaning for the user. For example, a user may want to search for ‘flooded residential areas’. The images show flooded areas only as a different colour or texture, and the system does not understand what that colour or texture means, so the user is forced to form a query like ‘show me all the grey coloured regions’. In a real-world scenario, queries that lack such semantic knowledge are of little practical use.

Our SIIM (Semantic Image Information Mining) framework provides information about the contents of an image, such as identifying a building, farmland or empty land, along with each object's spatial and directional relationships with its surroundings. In a post-flood disaster scenario, such information can be used to find all buildings that are surrounded by water and have a road that is unaffected or only partially flooded.
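To make the post-flood query concrete, here is a minimal Python sketch of how such a search might look once image regions carry semantic labels and spatial relations. It is an illustration only and not the SIIM implementation: the `Region` class, the relation names `surrounded_by` and `adjacent_to`, the `flood_state` values and the sample data are all assumptions made for this example. In particular, linking a building to its road through `adjacent_to` is just one way to read "has a road".

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    """A labelled image region (hypothetical structure, not SIIM's own)."""
    region_id: str
    label: str                         # e.g. "building", "road", "water"
    flood_state: str = "unaffected"    # "unaffected", "partial" or "flooded"
    # Maps a relation name to the ids of the regions it points to.
    relations: dict = field(default_factory=dict)


def related(region, relation, regions):
    """Return the regions linked to `region` through `relation`."""
    return [regions[rid] for rid in region.relations.get(relation, ())]


def reachable_flooded_buildings(regions):
    """Buildings surrounded by water that still have a usable road."""
    usable = {"unaffected", "partial"}
    matches = []
    for region in regions.values():
        if region.label != "building":
            continue
        in_water = any(
            n.label == "water"
            for n in related(region, "surrounded_by", regions)
        )
        has_usable_road = any(
            n.label == "road" and n.flood_state in usable
            for n in related(region, "adjacent_to", regions)
        )
        if in_water and has_usable_road:
            matches.append(region.region_id)
    return sorted(matches)


# Made-up sample scene: one water body, two roads, three buildings.
regions = {r.region_id: r for r in [
    Region("w1", "water", "flooded"),
    Region("r1", "road", "partial"),
    Region("r2", "road", "flooded"),
    Region("b1", "building", "flooded",
           {"surrounded_by": {"w1"}, "adjacent_to": {"r1"}}),
    Region("b2", "building", "flooded",
           {"surrounded_by": {"w1"}, "adjacent_to": {"r2"}}),
    Region("b3", "building", "unaffected", {"adjacent_to": {"r1"}}),
]}

print(reachable_flooded_buildings(regions))  # ['b1']
```

The point of the sketch is that the query is phrased in terms of buildings, water and roads rather than pixel colours, which is the shift from low-level features to semantic knowledge described above.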

This work was recognised by Research Matters, which subsequently published an article about it.

More details of the work can be found here.